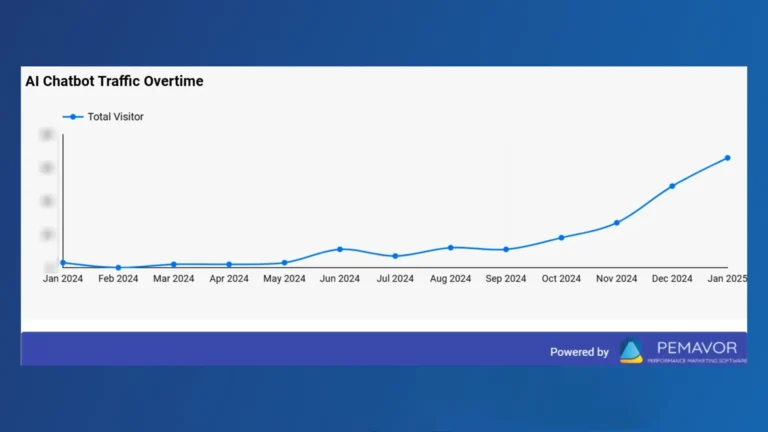

According to a McKinsey study, 65% of organizations use generative AI in at least one business function. However, many businesses have struggled to connect AI with their existing data.

This is where LLM (Large Language Model) SaaS (Software as a Service) providers come in: they offer simple, easy-to-use AI solutions. With these platforms, businesses can cut costs and save time without hiring experts or building complicated systems, and focus on their strategic goals instead.

What is LLM SaaS?

LLM SaaS helps businesses adopt AI without high costs or long development times. It provides tools to integrate advanced AI into operations quickly and efficiently.

- Faster setup: Businesses can start using AI in weeks, not months.

- Lower costs: Saves money by cutting the need for costly infrastructure and teams.

- Customization options: Allows fine-tuning to match specific business needs.

With this practical approach, you can build AI-driven systems with far less effort.

However, what about challenges?

LLM SaaS offers many benefits, but it also comes with challenges, such as ensuring data privacy, handling the resource demands of large models, and aligning AI with business goals.

Why choose SaaS for hosting LLMs on your data?

The benefits of these solutions span both technical performance and strategic value, addressing businesses’ unique needs. This approach can enhance operational efficiency.

Let’s take a closer look at the most important ones.

Data security in SaaS LLMs

As noted earlier, data privacy can be a challenge. However, reputable companies are taking steps to address this. SaaS platforms prioritize protecting sensitive information through:

- Encryption: Protects data during transmission and storage, keeping it safe from unauthorized access.

- Compliance standards: Follows rules like GDPR and HIPAA to meet industry standards.

- Access control: Limits access to sensitive data to authorized users only.
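
To make the access-control point concrete, here is a minimal sketch of a role-based check. The role names, the permission labels, and the `can_read` and `visible_records` helpers are all hypothetical, invented for illustration; real platforms expose their own identity and permission systems.

```python
# Hypothetical role-to-permission mapping; names are illustrative only.
ROLE_PERMISSIONS = {
    "admin": {"read_sensitive", "read_public"},
    "analyst": {"read_public"},
}


def can_read(role: str, sensitive: bool) -> bool:
    """Return True if the given role may read a record."""
    needed = "read_sensitive" if sensitive else "read_public"
    # Unknown roles get an empty permission set, so they are denied by default.
    return needed in ROLE_PERMISSIONS.get(role, set())


def visible_records(role: str, records: list) -> list:
    """Keep only the records the role is allowed to see."""
    return [r for r in records if can_read(role, r["sensitive"])]


records = [
    {"id": 1, "sensitive": False},
    {"id": 2, "sensitive": True},
]
print([r["id"] for r in visible_records("analyst", records)])  # [1]
print([r["id"] for r in visible_records("admin", records)])    # [1, 2]
```

Denying unknown roles by default is a deliberate choice here: a missing entry should never grant access to sensitive data.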

Optimization strategies for private data

Additionally, LLM SaaS is designed to optimize performance while maintaining privacy and control.

- APIs let businesses connect LLMs to their existing systems, ensuring smooth and efficient operations.

- Businesses can adjust models to fit their specific needs, achieving better results without extra resource demands.

- SaaS platforms securely train and run models on private datasets, keeping data confidential while improving accuracy.
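
As a rough sketch of what an API integration can look like, the snippet below builds an HTTPS request with Python's standard library. The endpoint URL, the payload fields (`prompt`, `max_tokens`), and the bearer-token header are assumptions for illustration; each provider documents its own URL, request schema, and authentication scheme.

```python
import json
import urllib.request

# Hypothetical endpoint; replace with the URL from your provider's docs.
API_URL = "https://llm.example.com/v1/generate"


def build_request(prompt: str, api_key: str, max_tokens: int = 256) -> urllib.request.Request:
    """Build (but do not send) a POST request for a text completion."""
    body = json.dumps({"prompt": prompt, "max_tokens": max_tokens}).encode("utf-8")
    return urllib.request.Request(
        API_URL,
        data=body,
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


req = build_request("Summarize this support ticket: ...", api_key="YOUR_KEY")
print(req.get_method(), req.full_url)  # POST https://llm.example.com/v1/generate
# Sending it would look like: urllib.request.urlopen(req, timeout=30)
```

Keeping request construction separate from sending makes the integration easy to test without network access or a live key.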

How SaaS enables LLM deployments on private data

At its core, SaaS acts as a bridge. It gives businesses the tools to use AI while securing their data.

To run LLMs on private data, businesses must first clean and organize the data for better performance. From there, fine-tuning the models tailors them to specific tasks, while APIs make it easy to connect AI tools with existing systems.
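
The cleaning step might look something like the sketch below, which normalizes whitespace, drops incomplete rows, removes duplicates, and writes one JSON object per line. The `question`/`answer` input fields and the `prompt`/`completion` output fields are assumptions; check the exact fine-tuning format your provider expects.

```python
import json


def clean_records(raw_records: list) -> list:
    """Normalize whitespace, drop incomplete rows, and remove duplicates."""
    seen = set()
    cleaned = []
    for rec in raw_records:
        # Collapse runs of whitespace; treat missing or None values as empty.
        question = " ".join(str(rec.get("question") or "").split())
        answer = " ".join(str(rec.get("answer") or "").split())
        if not question or not answer:
            continue  # skip rows missing either field
        key = (question.lower(), answer.lower())
        if key in seen:
            continue  # skip case-insensitive duplicates
        seen.add(key)
        cleaned.append({"prompt": question, "completion": answer})
    return cleaned


def to_jsonl(records: list) -> str:
    """Serialize records as JSON Lines (one JSON object per line)."""
    return "\n".join(json.dumps(r, ensure_ascii=False) for r in records)


raw = [
    {"question": "  What is   SaaS? ", "answer": "Software as a Service"},
    {"question": "what is saas?", "answer": "software as a service"},
    {"question": "", "answer": "Orphaned answer"},
]
print(to_jsonl(clean_records(raw)))
# {"prompt": "What is SaaS?", "completion": "Software as a Service"}
```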

Deployment options vary depending on business needs. Free tiers work well for testing smaller applications, while premium plans offer advanced features for larger businesses. Overall, SaaS makes AI more accessible by providing flexible, scalable solutions.

Some well-known providers include OpenAI, Cohere, and Anthropic. OpenAI offers APIs for advanced LLMs, Cohere focuses on customizable models with strong privacy features, and Anthropic prioritizes safe and ethical AI.

Core features of SaaS LLM providers

By focusing on the following features, businesses can ensure their SaaS LLM provider meets both current and future needs.

Ease of deployment and integration

- Quick setup with minimal technical effort.

- Smooth integration with existing workflows.

- Tailored solutions for unique business needs.

Data security and compliance

- Encryption to protect proprietary information.

- Meets GDPR, HIPAA, and other standards.

- Reliable environments for sensitive data.

Scalability for growing needs

- Handles large data volumes as your business grows.

- Supports evolving use cases and processing demands.

Performance optimization

- Improve LLM efficiency and outcomes.

- Optimize resource usage to reduce expenses.

The top SaaS providers for running LLMs on private data

When choosing a SaaS provider for hosting LLMs, it’s essential to evaluate both established giants and emerging players. Here’s a list of top providers and what they bring to the table:

AWS SageMaker

A comprehensive platform for training, hosting, and scaling custom LLMs. AWS SageMaker simplifies model deployment with integrated tools for data preparation, fine-tuning, and monitoring. Its robust infrastructure ensures scalability for businesses of all sizes.

Cost and pricing:

- Pay-as-you-go model based on compute hours, storage, and data transfer.

- Free tier for 2 months with limited resources.

- Savings Plans offer discounts, but there are no traditional reserved instances.

Performance and efficiency:

- Optimized for LLMs with distributed training and model parallelism.

- Built-in tools for scalability and cost-efficiency.

User experience:

- Steep learning curve, but supported by detailed tutorials and documentation.

Scalability:

- Highly scalable, supports distributed training for large datasets.

Ethics and AI governance:

- GDPR and HIPAA-compliant when configured correctly.

- Strong encryption and IAM tools for security.

Sustainability:

- Carbon-neutral goal by 2040 with energy-efficient tools.

Community and ecosystem:

- Extensive AWS integrations, but a less vibrant community than open-source alternatives.

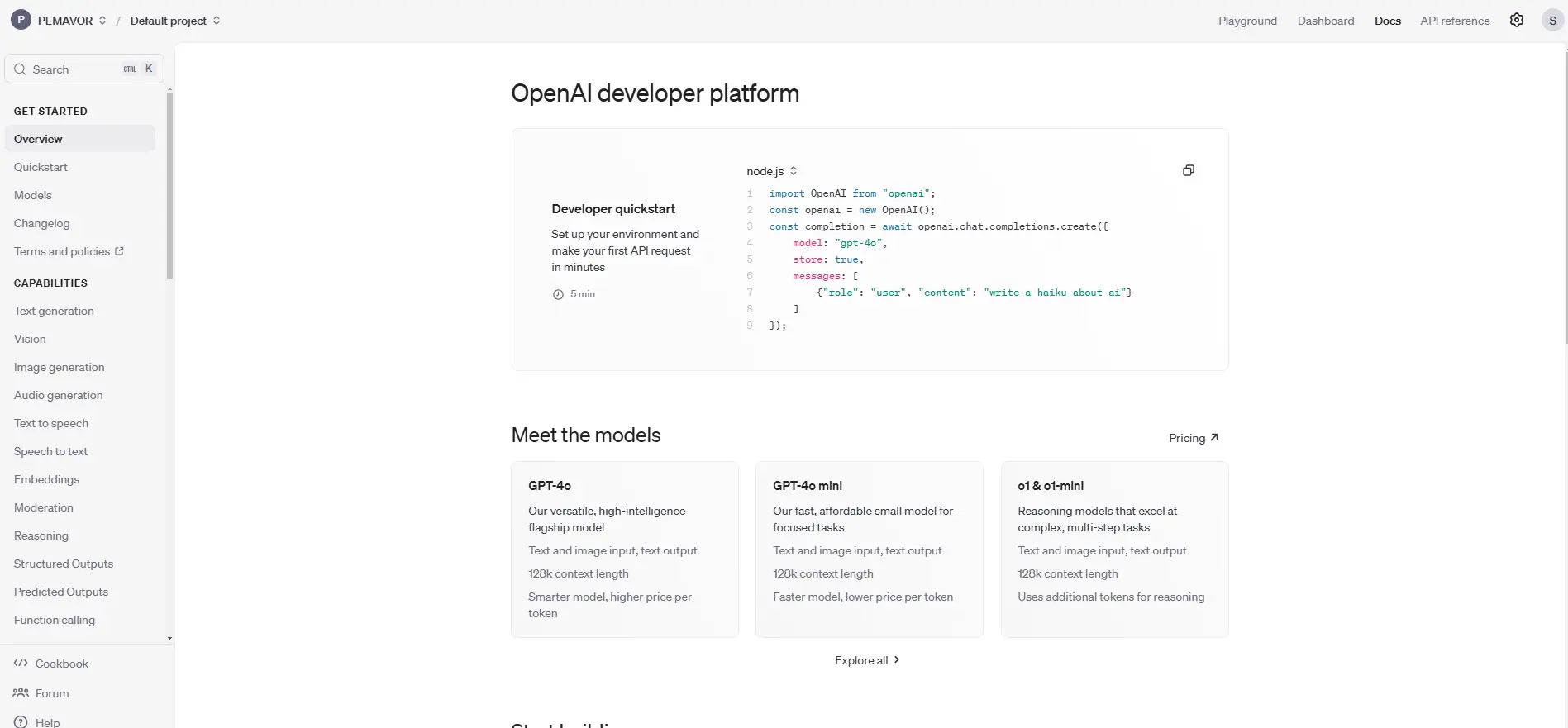

OpenAI

OpenAI provides robust APIs for leveraging advanced LLMs. Users can easily customize models and run them on private datasets. With powerful performance and extensive application options, OpenAI is particularly well-suited for businesses requiring comprehensive integrations.

Cost and pricing:

- Charges based on API usage (tokens processed).

- No free tier, but predictable pricing for different scales.

Performance and efficiency:

- Excellent performance on text-based tasks; highly efficient, but the underlying infrastructure is less transparent.

User experience:

- Simple API design with great documentation; beginner-friendly, but customization is limited.

Scalability:

- Scalable API endpoints, though constrained by usage caps and token limits.

Ethics and AI governance:

- GDPR-compliant but lacks detailed transparency for industries needing strict privacy.

Sustainability:

- Limited focus on sustainability; high energy costs for training large models.

Community and ecosystem:

- Active developer community; less collaborative than open-source alternatives.
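
Because pricing is based on tokens processed, it helps to estimate spend before committing. The sketch below shows the arithmetic only. The per-1,000-token prices and traffic figures are placeholders, not real rates from OpenAI or any other provider, and the `estimate_monthly_cost` helper is hypothetical.

```python
def estimate_monthly_cost(
    requests_per_day: int,
    input_tokens: int,
    output_tokens: int,
    price_in_per_1k: float,
    price_out_per_1k: float,
    days: int = 30,
) -> float:
    """Estimate monthly spend from average tokens per request."""
    per_request = (
        (input_tokens / 1000) * price_in_per_1k
        + (output_tokens / 1000) * price_out_per_1k
    )
    return round(per_request * requests_per_day * days, 2)


# Placeholder numbers: 1,000 requests a day, 500 input and 200 output tokens each.
cost = estimate_monthly_cost(
    requests_per_day=1000,
    input_tokens=500,
    output_tokens=200,
    price_in_per_1k=0.001,   # hypothetical price per 1,000 input tokens
    price_out_per_1k=0.002,  # hypothetical price per 1,000 output tokens
)
print(cost)  # 27.0
```

Swapping in the current rates from a provider's pricing page turns this into a quick budget check for different traffic levels.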

Hugging Face

This platform provides flexible options to host or integrate open-source LLMs through a SaaS model. Businesses can fine-tune Hugging Face models on their private data and use them safely, keeping control over sensitive information.

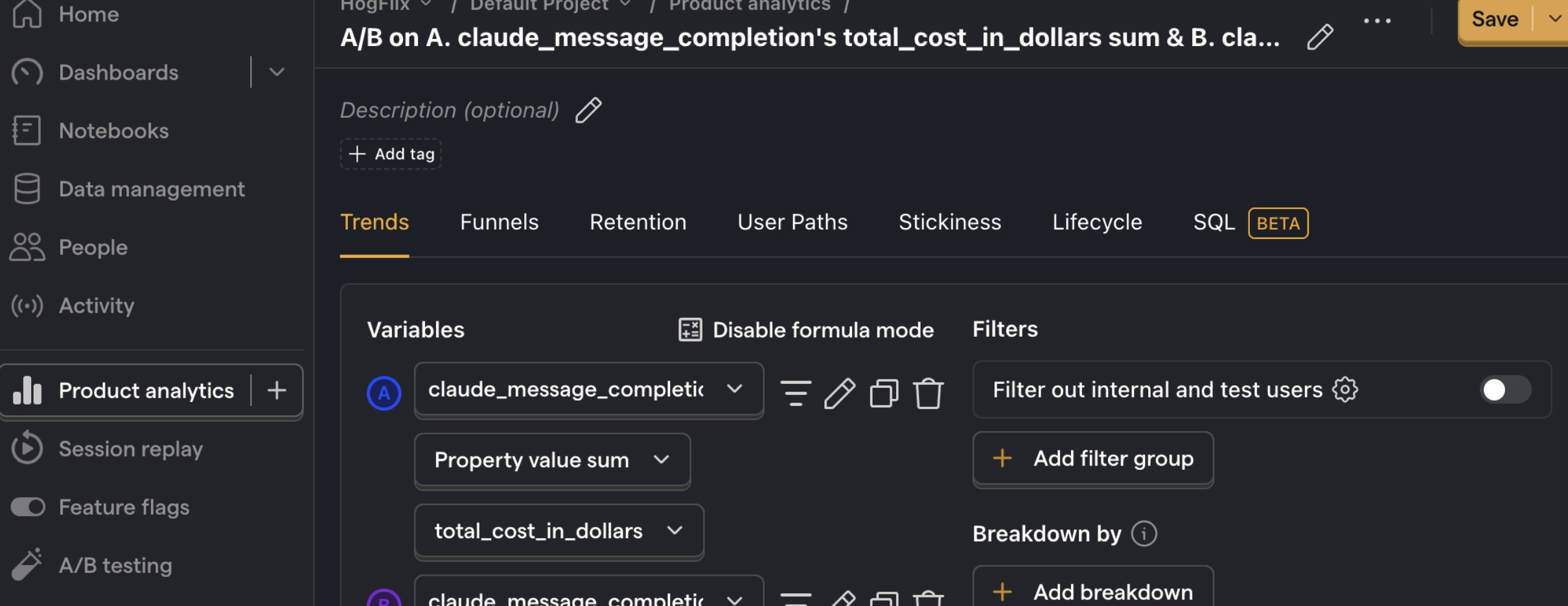

Anthropic

Anthropic specializes in building safer, more reliable LLMs. Its Claude model is designed for businesses prioritizing ethical AI applications and enhanced data privacy. Anthropic’s focus on AI safety sets it apart in the market.

Cost and pricing:

- Premium pricing tailored for enterprises prioritizing safety and privacy.

Performance and efficiency:

- Focused on AI safety and reliability, with consistent and secure performance.

User experience:

- Easy to use, with enterprise-focused customization options.

Scalability:

- Scales securely for enterprise use, prioritizing ethical and sensitive applications.

Ethics and AI governance:

- Strong focus on ethical AI and compliance with GDPR and HIPAA standards.

Sustainability:

- Emphasizes ethical practices but offers limited transparency about energy usage.

Community and ecosystem:

- Smaller but growing community focused on ethical AI solutions.

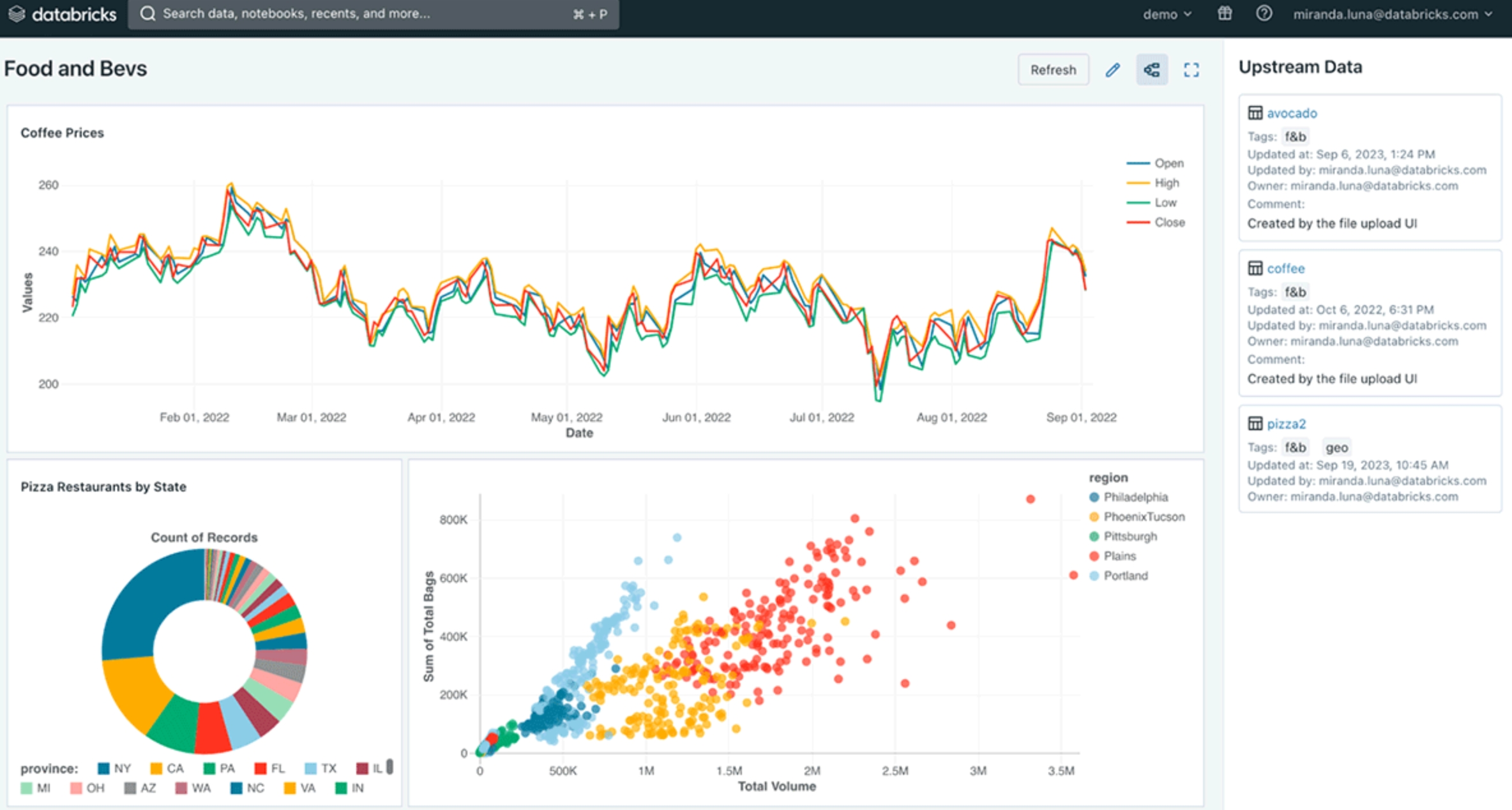

Databricks

A newer player combining data engineering with AI, Databricks enables businesses to train and deploy LLMs on private datasets efficiently. Its unified platform is designed for teams managing large-scale data and machine learning workflows.

Cost and pricing:

- Subscription-based with free trials and volume discounts.

Performance and efficiency:

- Optimized for data-heavy workflows and massive datasets.

- Strong integration with AI pipelines and analytics tools.

User experience:

- Intuitive notebooks and seamless integration with existing workflows.

Scalability:

- Exceptional scalability for big data and enterprise workloads.

Ethics and AI governance:

- GDPR and HIPAA compliant with built-in tools for auditability and data security.

Sustainability:

- Focuses on energy optimization and holds green certifications.

Community and ecosystem:

- Strong ecosystem for enterprises; frequent collaborations with academic and industry leaders.

| Feature | AWS SageMaker | OpenAI | Hugging Face | Anthropic | Databricks |
|---|---|---|---|---|---|
| Cost and pricing | Pay-as-you-go; free tier (2 months); discounts via Savings Plans. | Usage-based pricing (tokens); no free tier. | Flexible plans; free tier with limits. | Premium pricing for safety-focused enterprises. | Subscription-based; free trials and discounts. |
| Performance and efficiency | Optimized for LLMs; distributed training and scalability tools. | High efficiency for text; less transparent. | Pre-trained, customizable models for private data. | Reliable, secure AI tuned for ethical use. | Optimized for big data and AI pipelines. |
| User experience | Steep learning curve; tutorials and documentation available. | Simple APIs; beginner-friendly, less customizable. | Easy-to-use; strong community resources. | Enterprise-focused, simple customization. | Intuitive notebooks; seamless integrations. |
| Scalability | Highly scalable; supports distributed training. | Scalable APIs; limited by usage caps. | Flexible for hosted and self-hosted scaling. | Secure scaling for sensitive industries. | Exceptional scalability for big data workflows. |
| Ethics and AI governance | GDPR and HIPAA compliant; strong encryption and IAM tools. | GDPR compliant; lacks full privacy transparency. | GDPR compliant; HIPAA requires extra setup. | Focuses on ethical AI; GDPR and HIPAA compliant. | GDPR and HIPAA compliant; auditability tools. |
| Sustainability | Carbon-neutral goal by 2040; energy-efficient tools. | Limited focus; high energy costs for models. | Energy-efficient tools like DistilBERT. | Ethical practices; limited energy transparency. | Energy-optimized workflows; green certifications. |
| Community and ecosystem | Extensive AWS integrations; less vibrant than open-source. | Active developer community; less collaborative. | Thriving open-source community; frequent updates. | Smaller community focused on ethical AI. | Strong enterprise ecosystem; academic ties. |

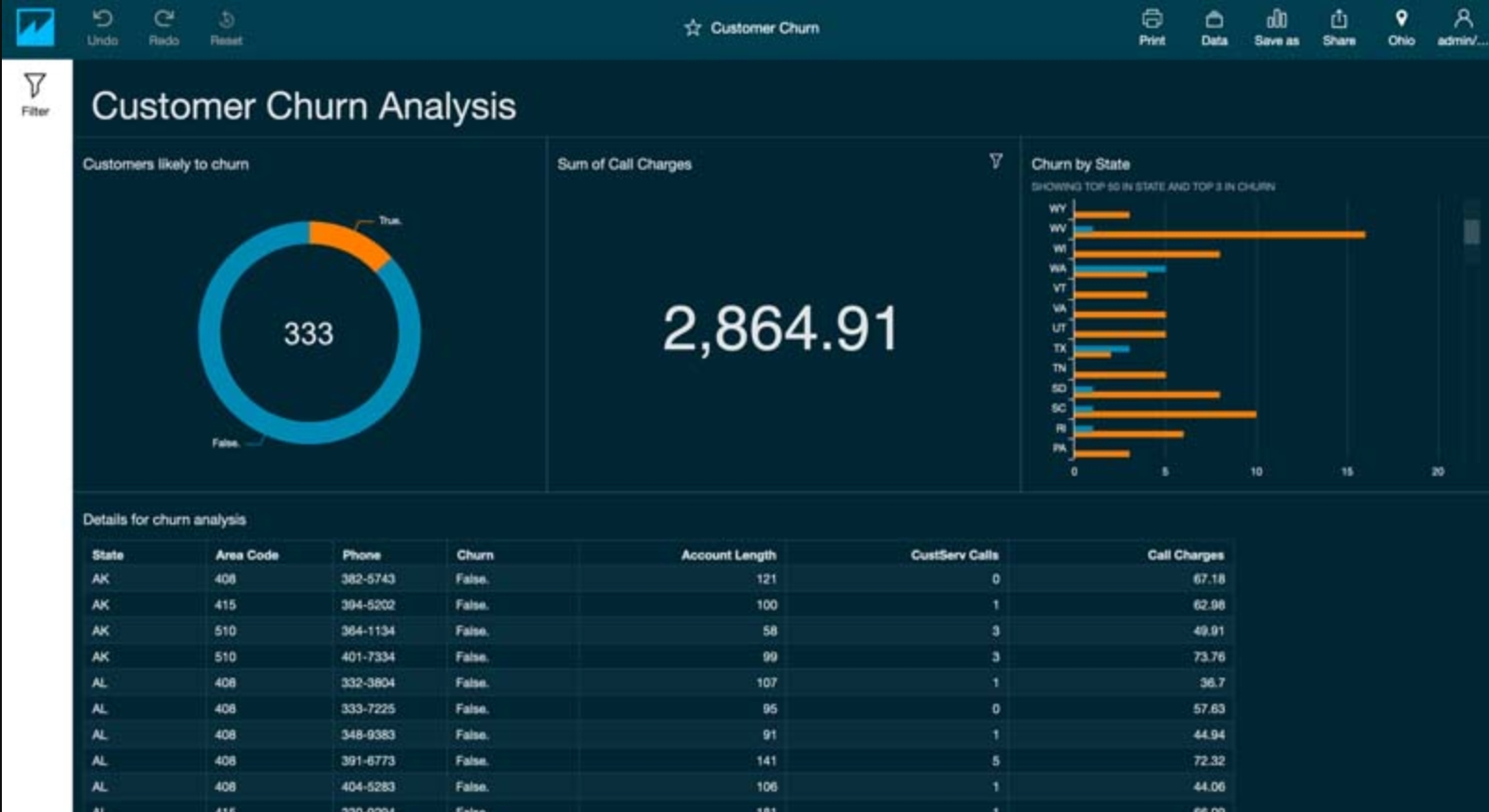

Case studies: Successful LLM deployments with SaaS

Here are some real-life examples of LLM SaaS providers at work.

For example, GitHub collaborated with OpenAI to develop GitHub Copilot. This tool helps developers by offering coding suggestions in real time.

In addition, Integration.app uses LLM technology to automate SaaS application integration. As a result, it simplifies connections between software tools and reduces engineering hours.

Want to build your AI SaaS with LLMs?

Step-by-step guide: Building AI solutions with LLM SaaS

Creating an AI SaaS solution with large language models may seem complex, but breaking it into manageable steps makes it achievable. Here’s a clear, actionable guide:

1. Identify business needs and datasets

Start by defining the problem you want AI to solve. For example, are you looking to improve customer service, automate tasks, or analyze data? Once you have a goal, find the right datasets to train the model. Make sure the data is clean, relevant, and diverse.

2. Select the right SaaS provider

Find SaaS providers that fit your needs. When comparing them:

- Ensure they comply with standards like GDPR or HIPAA.

- Check for APIs or custom integrations.

- Make sure they align with your budget and future growth.

- Test their features before making a decision.

If needed, revisit the provider comparison above to choose one of our favorites.
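
One way to make this comparison less subjective is a simple weighted scoring matrix. The sketch below is only an illustration: the criteria weights, the 1-to-5 scores, and the provider names are made up, and you would replace them with your own assessment.

```python
# Hypothetical weights reflecting how much each criterion matters (sum to 1.0).
WEIGHTS = {"compliance": 0.4, "integration": 0.2, "cost": 0.2, "features": 0.2}


def weighted_score(scores: dict, weights: dict = WEIGHTS) -> float:
    """Combine per-criterion scores (1 to 5) into one weighted total."""
    return sum(weights[criterion] * scores[criterion] for criterion in weights)


def rank_providers(candidates: dict) -> list:
    """Return provider names sorted from highest to lowest weighted score."""
    return sorted(
        candidates,
        key=lambda name: weighted_score(candidates[name]),
        reverse=True,
    )


# Placeholder scores for two fictional providers.
candidates = {
    "Provider A": {"compliance": 5, "integration": 3, "cost": 2, "features": 4},
    "Provider B": {"compliance": 3, "integration": 4, "cost": 5, "features": 3},
}
for name in rank_providers(candidates):
    print(name, round(weighted_score(candidates[name]), 2))
```

With these placeholder numbers, the heavier weight on compliance puts Provider A ahead even though Provider B is cheaper, which shows how the weights encode your priorities.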

3. Test small-scale deployments

Start with a small, manageable use case to reduce risks. Some providers offer free or affordable options for testing. Deploy your LLM on a limited dataset and evaluate its performance. This stage is an opportunity to fine-tune the setup and collect user feedback.
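
A small pilot can be evaluated with a handful of test prompts and expected answers. The sketch below assumes a crude keyword-match check and uses a stand-in `fake_model` function in place of a real provider call; both are illustrative choices, not a recommended evaluation standard.

```python
def evaluate(model_fn, test_cases: list) -> float:
    """Return the share of test cases whose output contains the expected keyword."""
    if not test_cases:
        return 0.0
    passed = sum(
        1
        for prompt, expected in test_cases
        if expected.lower() in model_fn(prompt).lower()
    )
    return passed / len(test_cases)


def fake_model(prompt: str) -> str:
    """Stand-in for a real LLM call so the sketch runs offline."""
    if "refund" in prompt.lower():
        return "Our refund policy allows returns within 30 days."
    return "I'm not sure."


test_cases = [
    ("What is the refund window?", "30 days"),
    ("How do I reset my password?", "reset link"),
]
print(evaluate(fake_model, test_cases))  # 0.5
```

Replacing `fake_model` with a function that calls your chosen provider lets you rerun the same test set after every change to prompts or data.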

4. Scale and optimize for enterprise use

Lastly, scale the validated model to handle larger datasets and more complex tasks. Optimize its performance by:

- Adjusting the LLM to better fit your needs.

- Using analytics tools to track efficiency and costs.

- Regularly updating the model with high-quality data.
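
Tracking efficiency and costs does not require a full analytics stack at first. The sketch below keeps a simple in-memory log of latency and token usage per call. The `UsageTracker` class and the per-1,000-token price are hypothetical, and a production setup would more likely rely on the provider's usage dashboard or a monitoring tool.

```python
from dataclasses import dataclass, field


@dataclass
class UsageTracker:
    """Collect latency and token counts for each LLM call."""

    calls: list = field(default_factory=list)

    def record(self, latency_s: float, tokens: int) -> None:
        """Store one call's latency (seconds) and total tokens used."""
        self.calls.append((latency_s, tokens))

    def summary(self, price_per_1k_tokens: float) -> dict:
        """Summarize call count, average latency, tokens, and estimated cost."""
        n = len(self.calls)
        if n == 0:
            return {"calls": 0, "avg_latency_s": 0.0, "total_tokens": 0, "est_cost": 0.0}
        total_tokens = sum(tokens for _, tokens in self.calls)
        avg_latency = sum(latency for latency, _ in self.calls) / n
        return {
            "calls": n,
            "avg_latency_s": round(avg_latency, 3),
            "total_tokens": total_tokens,
            "est_cost": round(total_tokens / 1000 * price_per_1k_tokens, 4),
        }


tracker = UsageTracker()
tracker.record(latency_s=0.8, tokens=1200)
tracker.record(latency_s=1.2, tokens=800)
print(tracker.summary(price_per_1k_tokens=0.002))  # placeholder price
```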

Building an AI SaaS solution can be manageable. Start small, focus on your goals, and work with reliable SaaS providers.

At PEMAVOR, we provide sophisticated solutions for PPC marketing with AI automation. If you want to elevate your marketing strategies, contact us.