N-grams essentially indicate the frequency of a word or sequence within our keyword list. Utilizing n-gram data enables you to identify the most popular and repetitive keywords.

Therefore, you can analyze the search patterns in your Google Search Console (GSC) data to optimize your PPC or SEO efforts.

What about n-gram analysis with BigQuery? We want to make it easy about how to use BigQuery for n-gram analysis.

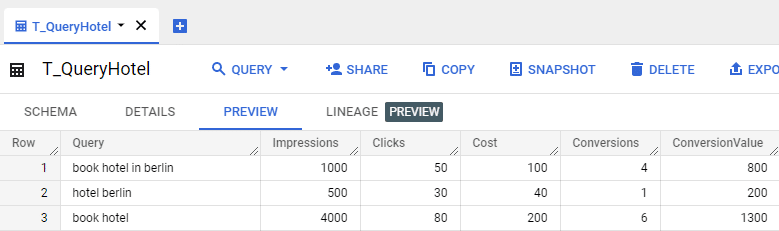

Have a look at sample data in BigQuery

Suppose we have this data in BigQuery and we need to perform n-gram analysis.

We have some queries and the performance values for each query like: Impressions, Clicks, Cost, and Conversion. In this analysis, we also calculate the aggregated performance values for each n-gram.

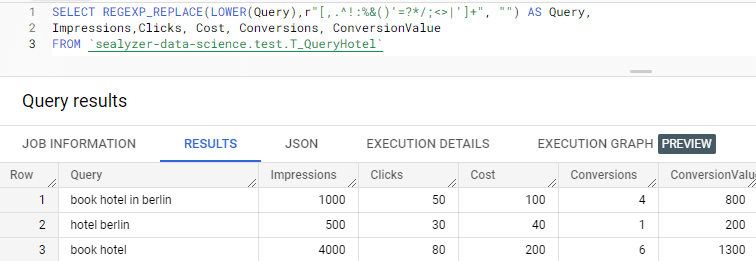

Data pre-processing step

Before running n-grams, we should clean our text data (Query field). What does cleaning mean?

- Exclude unwanted special characters like [,.^!:%&()’=?*/;<>|’] etc. You can also add any other unwanted character to exclude it according to your needs.

- Transform all texts to the same case-sensitive: Lowercase or uppercase.

The REGEXP_REPLACE function replaces the string matching the regular expression pattern with the specified string.

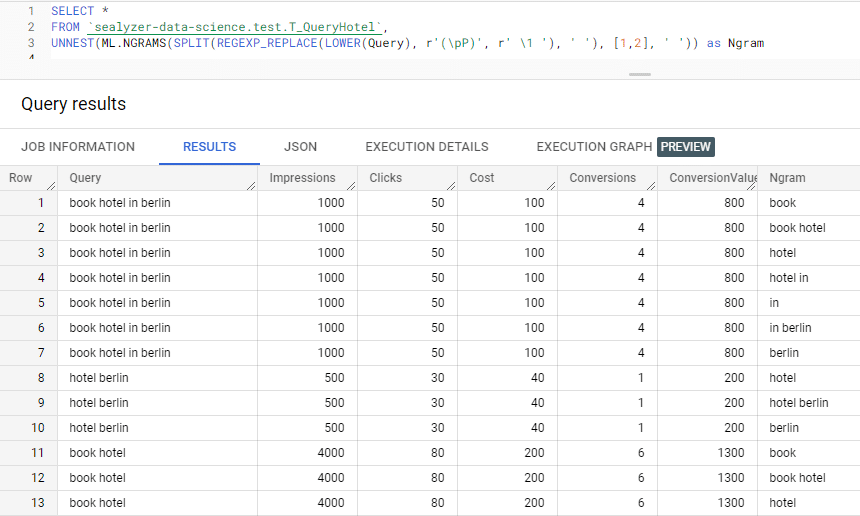

Transforming text data to n-grams using the ML.GRAMS function in BigQuery

In this transformation, we split a single line of keywords into multiple lines containing the n-gram substrings of the original keywords. We used 1-gram and 2-gram.

In our select statement, we used the ML-NGRAM function to create 1-gram and 2-gram. You can easily change this in the SQL statement to suit your needs.

In a single SQL line, the text in this query is written in Unicode lowercase and broken into spaces using Unicode punctuation rules. Since a SPLIT () results in an array under standard SQL, UNNEST () is used to resolve the results back into individual rows.

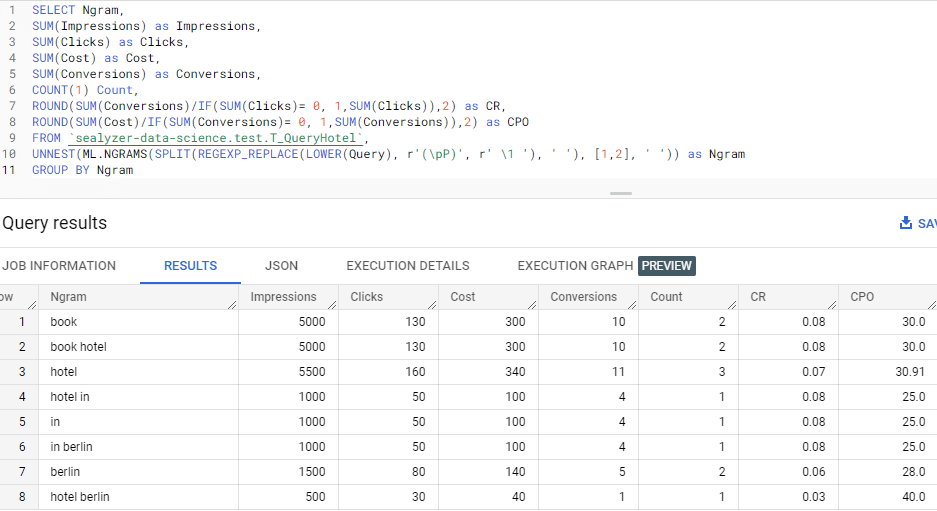

Displaying the n-gram performance values

After getting the n-grams with the original keywords, we can aggregate the performance values of Impressions, Clicks, Cost, and conversion by the corresponding n-gram. This gives us a general overview of each n-gram. We can also calculate CR (Conversion Rate) and CPO (Cost-Per-Order).

You’ll have to adjust the SQL code to your own table structure, nevertheless it makes sense to share the SQL code and copy and paste some code fragments:

SELECT Ngram, SUM(Impressions) as Impressions, SUM(Clicks) as Clicks, SUM(Cost) as Cost, SUM(Conversions) as Conversions, COUNT(1) Count, ROUND(SUM(Conversions)/IF(SUM(Clicks)= 0, 1,SUM(Clicks)),2) as CR, ROUND(SUM(Cost)/IF(SUM(Conversions)= 0, 1,SUM(Conversions)),2) as CPO FROM `sealyzer-data-science.test.T_QueryHotel`, UNNEST(ML.NGRAMS(SPLIT(REGEXP_REPLACE(LOWER(Query), r'(\pP)', r' \1 '), ' '), [1,2], ' ')) as Ngram GROUP BY Ngram

After running the script, you’ll get this output:

This approach is invaluable and scales well even with large datasets. Let’s try it out soon.

Check it out our free solution, Keywords N-Gram Analyzer. Besides, you can improve your knowledge by reading our previous article on n-gram analysis of search console queries. It shows you how to use our free Keyword N-gram Analyzer tool.

Need a custom solution? Let’s contact our experts.