Search engines rely on efficient crawling and indexing to provide relevant search results. However, websites with thousands of pages, deep navigation structures, or dynamically generated content often face indexing challenges.

With an XML sitemap, you can improve your website’s crawling efficiency by signaling which URLs are relevant and how often they are updated.

This is especially useful for:

- E-commerce websites with frequently updated product pages.

- News websites that publish time-sensitive articles.

- Large websites with complex structures.

- New websites with few backlinks, which makes them harder for search engines to discover.

A well-structured XML sitemap doesn’t guarantee higher rankings, but it helps search engines discover and index your pages more easily, which can improve visibility.

This guide covers the essentials of sitemaps and how to analyze competitor XML sitemaps to uncover on-page SEO opportunities.

How to create an XML sitemap

Before you can submit a sitemap, you need to create one. A typical sitemap URL looks like this: https://www.yourdomain.com/sitemap_index.xml

Using CMS plugins for automated sitemap generation

- WordPress: Use Rank Math, Yoast SEO or Google XML Sitemaps plugins to generate and auto-update your sitemap.

- Shopify: Shopify automatically generates a sitemap at yourdomain.com/sitemap.xml.

- Drupal: Install the XML Sitemap module to create and update sitemaps dynamically.

- BigCommerce, Wix, and other content management system (CMS) platforms: Most platforms provide built-in sitemap generation.

Manual methods

- Use Screaming Frog SEO Spider to crawl your website and generate a sitemap.

- Use Google’s Sitemap Generator to create one from your server.

- Manually create an XML file and structure it according to Google’s guidelines.
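If you create the file manually, the structure is simple. Below is a minimal sketch, assuming Python 3.8+ and its standard-library `xml.etree.ElementTree`, that renders a list of pages in the sitemaps.org format. The function name `build_sitemap` and the example URLs are placeholders, not part of any tool mentioned above.

```python
import xml.etree.ElementTree as ET

# Namespace required by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Return sitemap XML (bytes) for a list of (url, lastmod) pairs.

    lastmod is a W3C Datetime string such as "2024-05-01", or None to omit it.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, lastmod in pages:
        entry = ET.SubElement(urlset, "url")
        # ElementTree escapes special characters such as & automatically.
        ET.SubElement(entry, "loc").text = url
        if lastmod:
            ET.SubElement(entry, "lastmod").text = lastmod
    return ET.tostring(urlset, encoding="utf-8", xml_declaration=True)


if __name__ == "__main__":
    # Placeholder pages: replace with your own indexable, canonical URLs.
    pages = [
        ("https://www.yourdomain.com/", "2024-05-01"),
        ("https://www.yourdomain.com/blog/", None),
    ]
    print(build_sitemap(pages).decode("utf-8"))
```

You could run it as `python build_sitemap.py > sitemap.xml` (script name hypothetical) and upload the result to your site's root.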

Best practices for sitemap structuring

- According to Google’s documentation, keep each sitemap file under 50MB (uncompressed) and 50,000 URLs. For larger websites, split your sitemap into multiple smaller files, list them in a sitemap index file, and submit the index.

- Use clean, readable URL structures (avoid excessive parameters).

- Segment large sites by content type (e.g., blogs, products, categories).

- Ensure your sitemap includes only indexable URLs (avoid noindex, blocked, or duplicate pages).
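To illustrate the 50,000-URL rule and the index file, here is a hedged Python sketch that chunks a URL list and builds a sitemap index pointing at each chunk. The file names (`sitemap-1.xml`, ..., `sitemap_index.xml`), the `base_url` parameter, and the function names are assumptions. It only enforces the URL count, so sites with very long URLs may need smaller chunks to stay under 50MB.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS_PER_FILE = 50_000  # Google's per-file URL limit


def chunk(urls, size=MAX_URLS_PER_FILE):
    """Split a list of URLs into consecutive chunks of at most `size` items."""
    return [urls[i:i + size] for i in range(0, len(urls), size)]


def urlset_xml(urls):
    """Build one child sitemap containing only <loc> entries."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        ET.SubElement(ET.SubElement(urlset, "url"), "loc").text = url
    return ET.tostring(urlset, encoding="utf-8", xml_declaration=True)


def index_xml(sitemap_urls):
    """Build a sitemap index that points to each child sitemap."""
    index = ET.Element("sitemapindex", xmlns=SITEMAP_NS)
    for url in sitemap_urls:
        ET.SubElement(ET.SubElement(index, "sitemap"), "loc").text = url
    return ET.tostring(index, encoding="utf-8", xml_declaration=True)


def split_sitemap(urls, base_url, size=MAX_URLS_PER_FILE):
    """Return {filename: xml_bytes} for the child sitemaps plus sitemap_index.xml."""
    files = {}
    child_urls = []
    for n, part in enumerate(chunk(urls, size), start=1):
        name = f"sitemap-{n}.xml"
        files[name] = urlset_xml(part)
        child_urls.append(f"{base_url}/{name}")
    files["sitemap_index.xml"] = index_xml(child_urls)
    return files
```

Write each returned file to your web root and submit only `sitemap_index.xml`; search engines discover the child sitemaps through it.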

How to submit & optimize an XML sitemap for SEO

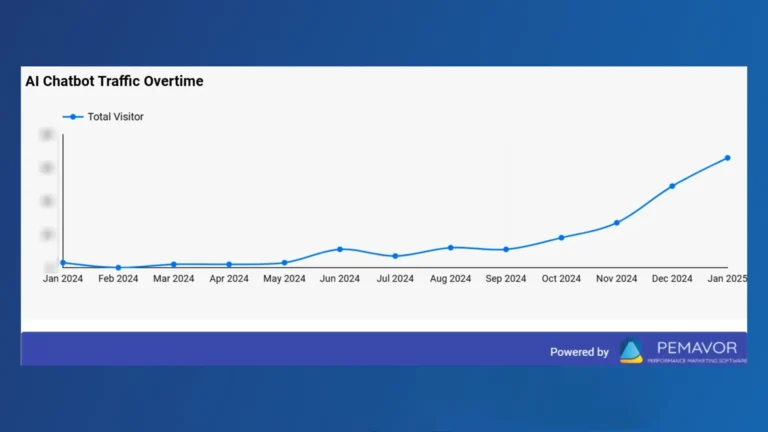

XML sitemaps serve as a roadmap for search engines, and they may also help AI crawlers, such as those that gather content for Large Language Models (LLMs). Search engines use sitemaps to discover and crawl pages efficiently, while AI-driven tools may use them to understand how a website’s content is organized. As AI-powered search becomes more common, a well-structured XML sitemap can support both traditional search engine visibility and AI-powered content discovery.

Each search engine requires a separate submission process.

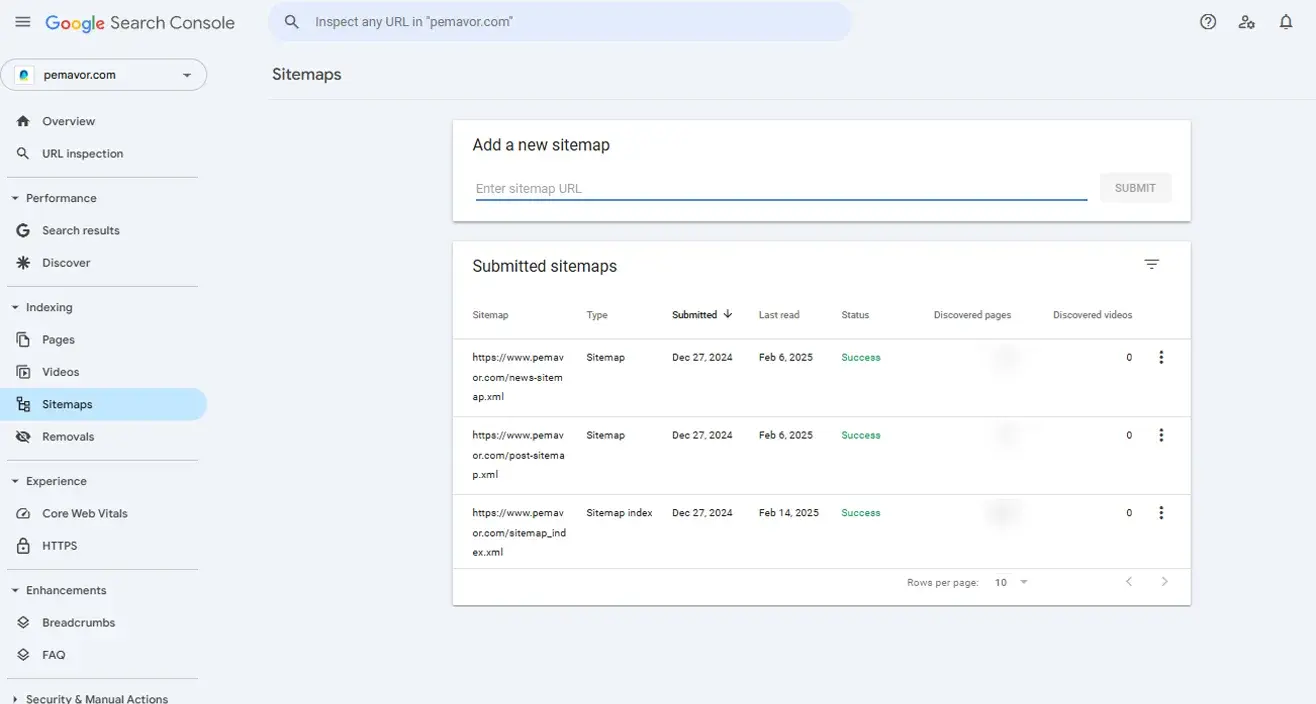

Google Search Console

- Log in to Google Search Console.

- In the left menu, click “Sitemaps” under “Indexing.”

- Enter your sitemap URL. In the “Add a new sitemap” field, input the full URL.

- Click “Submit”. If the submission is successful, Google will queue your sitemap for crawling.

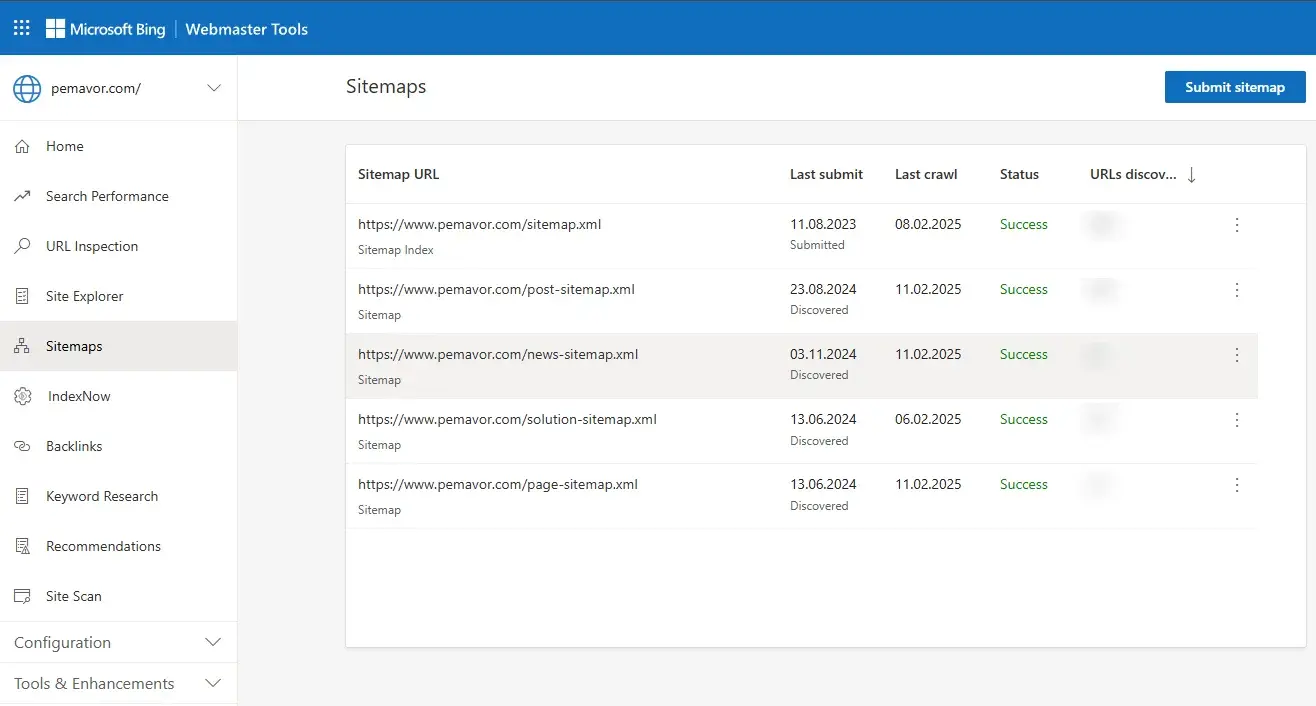

Bing Webmaster Tools

- Sign in to Bing Webmaster Tools.

- In the left menu, click “Sitemaps”.

- Paste your sitemap URL and click “Submit.”

- Check the status. Bing will indicate whether the submission was successful or if there are any issues.

If your website is already verified in Google Search Console, you can import it into Bing Webmaster Tools, which also brings over your submitted sitemaps and simplifies the process.

Internal linking & URL structuring

Search engines rely on efficient internal linking and structured URLs to crawl a website effectively.

SEO-friendly URL structures

- Keep URLs short, readable, and keyword-rich.

- Avoid dynamic parameters (e.g., ?id=1234) in indexed URLs.

- Use hyphens (-) instead of underscores (_) or spaces.
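For the hyphen rule, a small slug helper is often enough. This is a sketch using only Python's standard library; `slugify` is a hypothetical name, and it handles formatting only, so choosing the keywords in the title is still up to you.

```python
import re
import unicodedata


def slugify(title):
    """Turn a page title into a short, lowercase, hyphen-separated URL slug."""
    # Decompose accented characters (é -> e + accent) and drop anything non-ASCII.
    text = unicodedata.normalize("NFKD", title).encode("ascii", "ignore").decode("ascii")
    text = text.lower()
    # Replace every run of characters other than a-z and 0-9 (spaces, underscores,
    # punctuation) with a single hyphen.
    text = re.sub(r"[^a-z0-9]+", "-", text)
    return text.strip("-")
```

For example, `slugify("XML Sitemap Best_Practices (2024)")` returns `"xml-sitemap-best-practices-2024"`.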

Internal linking for better indexation

- Ensure important pages have strong internal links.

- Use breadcrumb navigation to improve structure.

- Link deep pages (product pages, blog posts) to important categories or hubs.

Pagination & parameter handling

- Ensure paginated pages link back to main categories to distribute link equity.

- Use robots.txt to block crawling of unnecessary parameter URLs and control crawl behavior, for example:

```
User-agent: *
Disallow: /*?id=
Disallow: /*?filter=
```
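Because wildcard rules are easy to get wrong, it can help to check which URLs a pattern actually blocks before deploying it. Python's built-in `urllib.robotparser` matches rules by plain prefix and doesn't implement the `*` and `$` wildcards, so the sketch below does the matching itself. It assumes Google's documented behavior (the longest matching rule wins, and `Allow` wins a tie); `is_blocked` and the `(kind, pattern)` rule format are hypothetical.

```python
import re


def rule_to_regex(path_pattern):
    """Convert a robots.txt path pattern to a regex using Google-style wildcards.

    '*' matches any sequence of characters; a trailing '$' anchors the end.
    """
    anchored = path_pattern.endswith("$")
    if anchored:
        path_pattern = path_pattern[:-1]
    regex = ".*".join(re.escape(part) for part in path_pattern.split("*"))
    return re.compile(regex + ("$" if anchored else ""))


def is_blocked(path, rules):
    """Return True if `path` (path plus query string) is disallowed.

    `rules` is a list of ("allow" | "disallow", pattern) pairs for one user agent.
    The longest matching pattern wins; on a tie, allow wins.
    """
    best = None  # (pattern length, is_allow) of the strongest match so far
    for kind, pattern in rules:
        if not pattern:
            continue  # an empty "Disallow:" blocks nothing
        if rule_to_regex(pattern).match(path):  # match() anchors at the path start
            candidate = (len(pattern), kind == "allow")
            if best is None or candidate > best:
                best = candidate
    return best is not None and not best[1]
```

Running every sitemap URL's path through `is_blocked` is one way to catch sitemap entries that your own robots.txt blocks.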

Common XML sitemap errors & how to fix them

Even after submitting a sitemap, issues may arise. Here are common XML sitemap errors and how to fix them:

| Error | Cause | Solution |
|---|---|---|
| “Indexed, though blocked by robots.txt” | Sitemap contains URLs that are blocked by robots.txt. | Check robots.txt to ensure that critical pages aren’t disallowed. Remove blocked URLs from the sitemap if they shouldn’t be indexed. |
| “Submitted but not indexed” | Google doesn’t find the URLs valuable, or there are crawling issues. | Check the URL with the URL Inspection tool in Google Search Console to see why it’s not indexed. Improve content quality, ensure proper internal linking, and avoid noindex tags. |
| “Sitemap too large” | Exceeds Google’s limit of 50MB (uncompressed) or 50,000 URLs. | Split the sitemap into multiple files and submit a sitemap index file referencing them. |
| Soft 404s in sitemaps | Pages listed in the sitemap return soft 404 errors (the page looks missing or empty but returns 200 OK instead of a proper 404). | Remove non-existent or low-quality pages from the sitemap. Ensure actual 404 pages return the correct HTTP status code. |
| “Couldn’t fetch sitemap” | Google can’t access the sitemap file. | Check that the sitemap URL is correct and publicly accessible, then review its status in GSC’s Sitemaps report. |
| “Sitemap contains non-canonical URLs” | URLs in the sitemap don’t match the canonical version. | Ensure every URL in the sitemap is self-canonical (its canonical tag points to itself). Avoid listing URLs that redirect. |
| “URLs returning 403/404/500 errors” | Some sitemap URLs return 403 (Forbidden), 404 (Not Found), or 500 (Server Error). | Fix server errors, ensure pages exist, and check access permissions. |
| “Duplicate URLs in sitemap” | The same page appears multiple times in the sitemap. | Ensure that only one version of each URL is listed (preferably the canonical version). |
| “Multiple sitemaps not referenced in sitemap index” | Multiple sitemaps exist, but they aren’t listed in a sitemap index. | Submit a sitemap index file that references all individual sitemaps. |
| “URLs in sitemap are not HTTPS” | HTTP URLs are used instead of HTTPS. | Ensure all URLs use HTTPS if the site is served over HTTPS. |
| “Non-200 response codes in sitemap” | URLs return redirects (301/302) or error codes. | Only include URLs that return a 200 OK status in the sitemap. |
| “Non-HTML pages in sitemap” | Sitemap includes PDFs, images, or other non-web pages incorrectly. | Use a separate image/video sitemap for non-HTML content if necessary. |
| “Excluded by ‘noindex’ tag” | Sitemap includes pages marked as noindex. | Remove noindex pages from the sitemap, or remove the noindex tag if the page should be indexed. |

You can diagnose these issues in Google Search Console’s Page indexing report (formerly the Coverage report) and Sitemaps report, then take appropriate action.
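Several of these checks can also be scripted before Search Console reports them. The sketch below, assuming Python's standard library only, extracts `<loc>` values from a sitemap and flags duplicates, non-HTTPS URLs, and non-200 responses. It sends HEAD requests without following redirects so 301/302s show up; some servers answer HEAD differently from GET, and soft 404s, noindex tags, and canonical mismatches need the page HTML, so they aren't covered. All function names are hypothetical.

```python
import http.client
import xml.etree.ElementTree as ET
from urllib.parse import urlsplit

NS = "{http://www.sitemaps.org/schemas/sitemap/0.9}"


def sitemap_urls(xml_bytes):
    """Extract every <loc> value from a urlset sitemap."""
    root = ET.fromstring(xml_bytes)
    return [loc.text.strip() for loc in root.iter(NS + "loc")]


def head_status(url, timeout=10):
    """Return the HTTP status of a HEAD request without following redirects."""
    parts = urlsplit(url)
    if parts.scheme == "https":
        conn = http.client.HTTPSConnection(parts.netloc, timeout=timeout)
    else:
        conn = http.client.HTTPConnection(parts.netloc, timeout=timeout)
    try:
        path = parts.path or "/"
        if parts.query:
            path += "?" + parts.query
        conn.request("HEAD", path)
        return conn.getresponse().status
    finally:
        conn.close()


def audit(urls, get_status=head_status):
    """Return a list of (url, problem) tuples for common sitemap errors."""
    problems = []
    seen = set()
    for url in urls:
        if url in seen:
            problems.append((url, "duplicate"))
            continue
        seen.add(url)
        if urlsplit(url).scheme != "https":
            problems.append((url, "not HTTPS"))
        status = get_status(url)
        if status != 200:
            problems.append((url, f"status {status}"))
    return problems
```

To audit a live sitemap, fetch it with `urllib.request.urlopen(url).read()`, pass the bytes to `sitemap_urls`, and print the result of `audit`. For large sitemaps, consider pausing between requests so the check doesn't overload your server.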

Automation & efficiency in managing sitemaps

Managing sitemaps manually can be time-consuming, especially for large websites with frequently updated content. Automating the process ensures that search engines always have access to the most recent and relevant URLs. Here’s how you can automate sitemap updates across different CMS platforms.

| CMS | Suggested Plugins/Modules | More Info |
|---|---|---|
| WordPress | Rank Math, Yoast SEO, Google XML Sitemaps | |
| Shopify | Built-in (no plugin needed) | Shopify Docs |
| HubSpot | Built-in (no plugin needed) | HubSpot Knowledge Base |
| Drupal | XML Sitemap Module | Drupal Module |
| Magento | Built-in (no plugin needed) | Magento Blog |
| Wix | The Wix SEO Wiz | Wix Help Center |
| Joomla | JSitemap | Joomla Sitemap Guide |
| BigCommerce | Built-in (no plugin needed) | BigCommerce Docs |
| PrestaShop | PrestaShop sitemap (GitHub) | Belvf Blog |
| Shopware | Built-in (no plugin needed) | Shopware Guides |

How can you ensure your sitemap stays updated automatically?

- Use CMS plugins: Most platforms have dedicated plugins that automatically generate and update sitemaps.

- Set up a cron job (for custom sites): If using a custom-built website, schedule a cron job to regenerate the sitemap regularly.

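- Keep your sitemap submitted in Google Search Console: Google refetches submitted sitemaps periodically, so a sitemap that updates automatically at the same URL doesn’t need to be resubmitted after every change.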
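For a custom site, the cron job typically runs a script that rebuilds the sitemap from your database. Whatever generates the XML, writing it atomically keeps crawlers from fetching a half-written file. A minimal Python sketch; the crontab entry, script path, and `write_atomically` name are hypothetical.

```python
import os
import tempfile

# Hypothetical crontab entry that regenerates the sitemap nightly at 03:00:
#   0 3 * * * /usr/bin/python3 /opt/site/regenerate_sitemap.py


def write_atomically(path, data):
    """Write bytes to `path` so readers never see a partially written file.

    The data goes to a temporary file in the same directory, which then replaces
    the old file in one step (os.replace is atomic on the same filesystem).
    """
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    try:
        with os.fdopen(fd, "wb") as tmp:
            tmp.write(data)
        # mkstemp creates owner-only files (0600); make it readable by the web server.
        os.chmod(tmp_path, 0o644)
        os.replace(tmp_path, path)
    except BaseException:
        os.unlink(tmp_path)
        raise
```

The regeneration script would build the new sitemap XML and pass the bytes to `write_atomically("sitemap.xml", data)`.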

For a detailed guide on automating sitemap updates across different CMS platforms, check out Delante’s guide.

How important is a sitemap for SEO?

A well-structured sitemap supports your website’s SEO by helping search engines find and crawl your most important pages. Below are essential best practices to maximize your sitemap’s impact.

Prioritizing high-value pages and avoiding duplicate content

Search engines don’t treat all pages equally, and neither should your sitemap. Prioritize indexing the pages that provide the most value. Low-value pages, such as thin content, outdated posts, or duplicate versions, should be excluded.

- Include key landing pages, high-traffic blog posts, product pages, and service pages.

- Exclude duplicate, paginated, and filter-generated URLs that don’t add SEO value.

- Use canonical tags to indicate the preferred version of a page and prevent search engines from indexing multiple variations.
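These rules translate directly into a filter over crawl data. The sketch assumes a hypothetical `CrawledPage` record (for example, exported from a site crawler) holding the final status code, a noindex flag, and the canonical URL. Excluding every URL with a query string is a simplifying assumption; paginated URLs without parameters would need an extra rule matching your site's pattern.

```python
from dataclasses import dataclass
from urllib.parse import urlsplit


@dataclass
class CrawledPage:
    # Hypothetical record shape, e.g. one row of a crawler export.
    url: str
    status: int       # final HTTP status code
    noindex: bool     # True if a robots meta tag or X-Robots-Tag says noindex
    canonical: str    # URL from <link rel="canonical">, or "" if none


def sitemap_candidates(pages):
    """Keep pages that belong in a sitemap: 200 OK, indexable, self-canonical,
    and free of query parameters. Duplicate URLs are listed once."""
    keep = []
    seen = set()
    for page in pages:
        if page.status != 200 or page.noindex:
            continue
        if page.canonical and page.canonical != page.url:
            continue  # another URL is the preferred version
        if urlsplit(page.url).query:
            continue  # parameter/filter URLs such as ?filter=red
        if page.url not in seen:
            seen.add(page.url)
            keep.append(page.url)
    return keep
```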

The role of lastmod, priority, and changefreq attributes

These optional attributes in XML sitemaps provide search engines with additional context about your content.

lastmod → Specifies the last time a page was updated, helping search engines prioritize crawling for frequently updated pages (e.g., news articles or active blogs).

priority → Originally designed to indicate a page’s importance relative to other pages on the same site, on a scale from 0.0 to 1.0. Google ignores the priority value, but some search engines, like Bing and Yandex, may still use it as a minor crawling hint. Internal linking and a clear site architecture remain the most reliable ways to influence crawling behavior.

changefreq → Designed to inform search engines how often a page is expected to change (daily, weekly, monthly). However, just like the priority attribute, it is no longer used by Google for crawling decisions. Google instead relies on lastmod and actual content updates. Some search engines, like Bing and Yandex, may still consider it as a minor hint, but its influence on crawling frequency is limited.

- Use accurate lastmod timestamps rather than auto-updating them on every crawl.

- Reserve priority values 0.8 and above for critical pages like homepage and cornerstone content.

- Set changefreq to “daily” or “weekly” for frequently updated sections, such as blogs or news pages.
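One way to keep lastmod accurate is to change it only when a page's content actually changes. The sketch below assumes you persist a small `state` dictionary between runs (for example, as JSON) and compares a SHA-256 hash of the content; `update_lastmod` is hypothetical. Hash only the main content, since the full HTML may change on every request because of rotating widgets or timestamps.

```python
import hashlib
from datetime import datetime, timezone


def update_lastmod(state, url, content, now=None):
    """Return the lastmod value for `url`, changing it only when content changes.

    `state` maps url -> (content_hash, lastmod) and should persist between runs.
    `now`, if given, must be a timezone-aware datetime.
    """
    digest = hashlib.sha256(content.encode("utf-8")).hexdigest()
    previous = state.get(url)
    if previous is not None and previous[0] == digest:
        return previous[1]  # unchanged content keeps its old timestamp
    if now is None:
        now = datetime.now(timezone.utc)
    lastmod = now.isoformat(timespec="seconds")  # W3C Datetime, e.g. 2024-05-01T12:00:00+00:00
    state[url] = (digest, lastmod)
    return lastmod
```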

When should you split your XML sitemap for better SEO?

For large websites, a single sitemap file may not be efficient. Google allows a maximum of 50,000 URLs per sitemap, but that doesn’t mean you should reach that limit.

- Segment sitemaps by content type (e.g., blog posts, product pages, category pages).

- For multilingual sites, create separate sitemaps for different language versions.

- Submit a sitemap index file that references multiple smaller sitemaps, making it easier to manage.
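A simple way to segment by content type is to group URLs by their first folder. This sketch assumes your URLs follow a folder-per-section pattern such as `/blog/...` or `/products/...`; on multilingual sites with `/en/` or `/de/` prefixes, the same grouping yields one group per language. `segment_by_section` is hypothetical.

```python
from collections import defaultdict
from urllib.parse import urlsplit


def segment_by_section(urls, default="pages"):
    """Group URLs by their first path segment, e.g. /blog/post-1 -> "blog".

    URLs without a folder (such as / or /about) go into the `default` group.
    Each group can become its own sitemap, referenced from one sitemap index.
    """
    groups = defaultdict(list)
    for url in urls:
        segments = [s for s in urlsplit(url).path.split("/") if s]
        section = segments[0] if len(segments) > 1 else default
        groups[section].append(url)
    return dict(groups)
```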

How can you use our free Sitemap Content Analyzer for SEO insights?

PEMAVOR’s Sitemap Content Analyzer helps SEO teams evaluate their website’s structure, uncover optimization opportunities, and refine their content strategies.

By using this tool, you can detect content gaps, keyword inconsistencies, and structural inefficiencies in competitors’ XML sitemaps. This way, you can create opportunities for better indexing and improved search visibility. Instead of manually reviewing sitemaps line by line, you can extract meaningful data to support data-driven content SEO decisions.

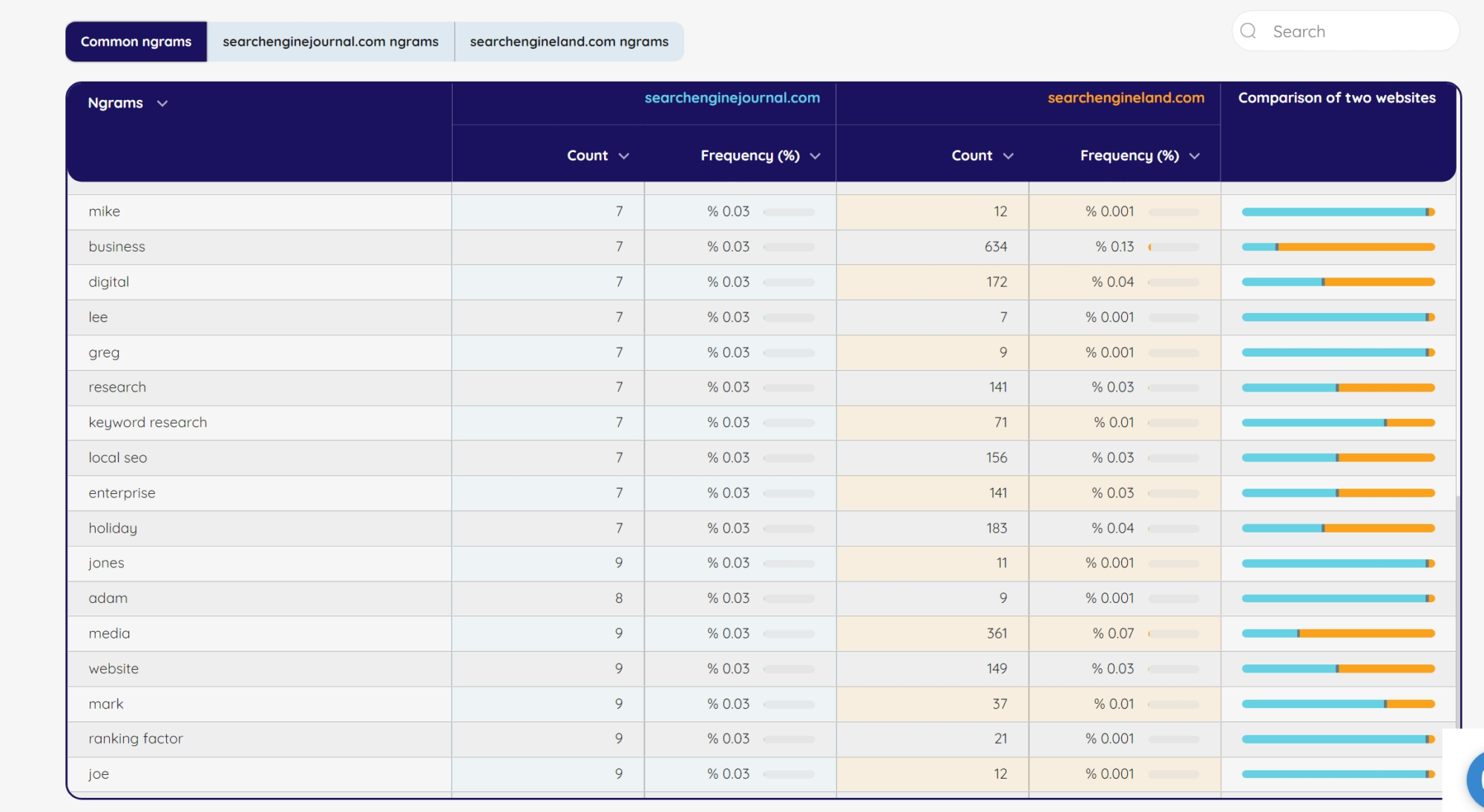

N-gram analysis for content patterns

A well-optimized XML sitemap should reflect a clear and consistent content strategy. The n-gram analysis feature scans page titles and URLs, identifying keyword trends and common patterns. This helps you spot redundant phrasing, missing target keywords, or inconsistencies that may impact rankings.
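To make the idea concrete, here is a minimal n-gram count over URL slugs. It's an illustrative sketch, not how PEMAVOR's tool works internally; it tokenizes each path segment separately so n-grams don't span folders. Function names are hypothetical.

```python
import re
from collections import Counter
from urllib.parse import urlsplit


def ngram_counts(urls, n=2):
    """Count n-grams (runs of n consecutive words) across URL path segments."""
    counts = Counter()
    for url in urls:
        for segment in urlsplit(url).path.lower().split("/"):
            tokens = re.findall(r"[a-z0-9]+", segment)  # hyphens act as separators
            for i in range(len(tokens) - n + 1):
                counts[" ".join(tokens[i:i + n])] += 1
    return counts
```

Calling `ngram_counts(urls, n=2).most_common(20)` lists the most frequent two-word phrases in a sitemap's URLs.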

Competitor content comparison

Competitor sitemaps often follow standard naming conventions. Here are some common examples:

- https://competitor.com/sitemap.xml

- https://competitor.com/sitemap_index.xml

- https://competitor.com/sitemap-category.xml (for segmented sitemaps)

- https://competitor.com/sitemap-posts.xml (for blog posts)

- https://competitor.com/sitemap-products.xml (for e-commerce sites)

You may also find the sitemap URL on a “Sitemap:” line in https://competitor.com/robots.txt.
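Checking robots.txt first and falling back to the common file names can be scripted. This sketch uses `RobotFileParser.site_maps()` from Python's standard library (available since Python 3.8) to read the `Sitemap:` lines; `candidate_sitemaps` and the list of guessed paths are assumptions based on the naming conventions above, so a guessed URL may not exist.

```python
from urllib.parse import urljoin
from urllib.robotparser import RobotFileParser

# Common naming conventions: guesses only, a competitor may use none of them.
COMMON_SITEMAP_PATHS = [
    "/sitemap.xml",
    "/sitemap_index.xml",
    "/sitemap-category.xml",
    "/sitemap-posts.xml",
    "/sitemap-products.xml",
]


def sitemaps_from_robots(robots_txt):
    """Return the URLs declared on 'Sitemap:' lines of a robots.txt file."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.site_maps() or []  # site_maps() returns None when none are declared


def candidate_sitemaps(site, robots_txt=""):
    """List declared sitemaps first, then guessed URLs that weren't declared."""
    declared = sitemaps_from_robots(robots_txt)
    guesses = [urljoin(site, path) for path in COMMON_SITEMAP_PATHS]
    return declared + [url for url in guesses if url not in declared]
```

To use it on a live site, pass the text of `urllib.request.urlopen("https://competitor.com/robots.txt").read().decode()` as `robots_txt`.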

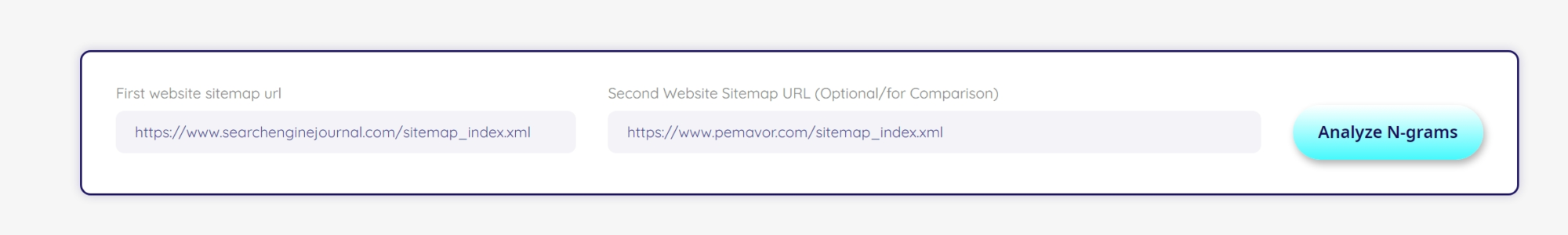

Now, let’s see how you can get this data instantly.

Sitemap Content Analyzer gives you valuable insights into your competitors’ content strategy, which can give you ideas for improving your own content. You get a list of the keywords your competitors use, along with comparison results. Note the results that are most relevant to your site: they show where competitors’ content performs well and where your site has room to improve.

#1: Enter your competitor’s sitemap URL. You can also compare your own XML sitemap with a competitor’s.

#2: Analyze the result.

N-gram analysis (Common keywords & phrases): Terms like “business,” “digital,” “keyword research,” and “local SEO” suggest frequent content themes.

Comparison of keyword frequency across two websites: “business” appears 7 times (0.03%) on searchenginejournal.com (SEJ) but 634 times (0.13%) on searchengineland.com (SEL). Because the two sitemaps differ greatly in size, the percentages are the fairer comparison: SEL’s share is roughly four times SEJ’s, a smaller gap than the raw counts suggest, but still a sign that SEL emphasizes “business” topics more than SEJ.

Comparison bars on the right: Longer bars indicate higher keyword usage on that website. For example, “media” appears far more often on SEL (361 mentions) than on SEJ (9 mentions), which suggests SEL covers media-related topics more extensively.
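Raw counts favor the larger site, so a share-of-total comparison is fairer. The sketch below normalizes term counts for two sites; `compare_terms` is hypothetical, and the numbers in the usage block are toy data, not the SEJ/SEL figures.

```python
from collections import Counter


def compare_terms(counts_a, counts_b, terms):
    """For each term, return (count_a, share_a, count_b, share_b, ratio_b_to_a).

    Shares are percentages of each site's total term count, so sites of
    different sizes can be compared. The ratio is None if site A never uses the term.
    """
    total_a = sum(counts_a.values()) or 1  # avoid dividing by zero for an empty site
    total_b = sum(counts_b.values()) or 1
    rows = {}
    for term in terms:
        a, b = counts_a.get(term, 0), counts_b.get(term, 0)
        share_a = 100 * a / total_a
        share_b = 100 * b / total_b
        ratio = share_b / share_a if share_a else None
        rows[term] = (a, round(share_a, 2), b, round(share_b, 2), ratio)
    return rows


if __name__ == "__main__":
    # Toy data for illustration only.
    site_a = Counter({"business": 1, "seo": 9})
    site_b = Counter({"business": 5, "seo": 5, "media": 10})
    for term, row in compare_terms(site_a, site_b, ["business", "media"]).items():
        print(term, row)
```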

How you can use these insights in your SEO strategy:

Content gaps & opportunities: If your website competes with these two, you can identify missing topics in your own content strategy.

Keyword prioritization strategy: SEJ and SEL emphasize different themes. For example, if your brand is in SEO tools or PPC marketing and your competitors focus heavily on “business” or “media,” you might shift your content focus to differentiate yourself.

Competitor benchmarking: If your competitors prioritize certain keywords, industries, or content topics, it shows what they consider valuable for SEO and audience targeting.

- Identify underused but high-impact topics that your competitors cover more effectively.

- Use keyword insights to optimize on-page SEO, metadata, and internal linking.

- Run this analysis periodically to track shifts in competitor content priorities.

Try PEMAVOR’s Sitemap Content Analyzer to gain actionable insights and improve search performance.

FAQ

What is an XML sitemap in SEO?

An XML sitemap is a file that lists the URLs you want search engines like Google, Bing, or Yahoo! to crawl and index. Search engines use it alongside links to discover your pages. It can also provide metadata, such as the last modified date, priority level, and expected update frequency, giving search engines a clearer picture of your content structure.

How many types of sitemaps are there, and which one is best for SEO?

There are several types of sitemaps, each serving a different purpose:

- XML Sitemaps: The standard format for search engines. They list URLs in an XML file to improve indexing, and they are the most important type for SEO.

- HTML Sitemaps: User-friendly, on-page sitemaps that help visitors navigate a website.

- RSS/Atom Feeds: Used for blogs and news sites to signal new or updated content to search engines.

Why are XML sitemaps essential for SEO?

An XML sitemap helps search engines discover and index important pages, improving search visibility. It’s especially valuable for large websites, e-commerce stores, and content-heavy sites, where some pages may be difficult to find through internal links alone.

Do XML sitemaps improve rankings?

XML sitemaps don’t directly boost rankings, but they help search engines discover and index pages more efficiently, which can improve visibility in search results.

How often should I update my sitemap?

If your CMS or a scheduled job regenerates your sitemap, updates happen automatically, but you should still audit it at least a few times a year to:

- Discover orphan URLs (URLs listed in your sitemap but not linked internally on the site).

- Identify URLs found in a crawl but missing from your sitemap.
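Both audit checks come down to set differences between the URLs in your sitemap and the URLs found by following internal links (for example, from a crawler export). A minimal sketch; the light normalization (lowercase scheme and host, no fragment) is an assumption you may need to extend, for example for trailing slashes, and the function names are hypothetical. Treat the "missing" list as candidates only, since noindex or non-canonical pages found in a crawl should stay out of the sitemap.

```python
from urllib.parse import urlsplit, urlunsplit


def normalize(url):
    """Lowercase scheme and host and drop any #fragment so equivalent URLs match."""
    parts = urlsplit(url)
    return urlunsplit((parts.scheme.lower(), parts.netloc.lower(), parts.path or "/", parts.query, ""))


def compare_sitemap_to_crawl(sitemap_urls, linked_urls):
    """Return (orphans, missing), both sorted.

    orphans: in the sitemap but never linked internally.
    missing: found by following internal links but absent from the sitemap.
    """
    in_sitemap = {normalize(u) for u in sitemap_urls}
    linked = {normalize(u) for u in linked_urls}
    return sorted(in_sitemap - linked), sorted(linked - in_sitemap)
```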

Should I include noindex pages in my sitemap?

No. Exclude noindex pages from your sitemap to avoid sending mixed signals to search engines, and include only indexable, high-value pages.